SPTDD: SharePoint and Test Driven Development, Part Two

Since I released Part One of this series into the wild there have been many heated discussions around SharePoint and Test Driven Development. As always, Andrew Woodward has a great deal to say on the subject; in fact, we recorded a SharePoint PodShow episode on this very topic at the European Best Practices conference (and traded a few well-meaning jabs during his presentation). During the discussion we concluded that most custom development projects lack some fundamental software development processes, and that TDD, while we may disagree on its overall value within the quality assurance lifecycle, plays an important role in improving the overall quality of custom software.

Recently, fellow SharePoint MVP Sahil Malik authored a post in which he dismisses TDD as having little to no value in SharePoint development projects. He summarized with the following quote: "So, TDD + SharePoint 2007? Well – screw it! Not worth it IMO". As usual, Sahil is nothing if not incendiary, but his basic premise is that the actual amount of code written for the average SharePoint project doesn’t warrant the overhead of a structured development process (he just happened to choose TDD in this instance). When considered from that perspective, I find myself in total disagreement with Sahil and in nearly complete agreement with Andrew’s post in response (which might come as a surprise to some readers). Put simply, Andrew argues that any form of unit testing, whether it be TDD or something else, is better than nothing – and I concur.

I also take issue with the basis of Sahil’s post; namely, that the amount of code written for any particular SharePoint project is comparatively small. I can produce two project plans for engagements we completed in the last year which demonstrate the exact opposite; in fact, programming hours exceeded all other tasks combined by a factor of nearly five. Why? Because we focus on building line of business applications on the SharePoint platform, not simply installing the product and whipping up a few web parts. It’s all a matter of perspective; it pains me when people who have never worked on a project outside their own little bubble make blanket statements about SharePoint. Everyone does something different with the product based on their area of expertise, which is one reason why governance is such a tricky thing to implement; one man’s intranet solution is another woman’s public-facing media site and never the twain shall meet. Saying that code is a small part of most SharePoint projects is ludicrous – just because it’s a small part of your work doesn’t mean it’s a small part of mine.

Getting back to the original point, if we alter the question from "Is TDD worth it?" to "Is Unit testing worth it?", I believe we arrive at an altogether different conclusion (assuming, of course, that you’re not a drone who thinks TDD and unit testing are the same thing – see my previous article). If one is truly interested in producing quality code then one MUST perform some level of Unit testing in order to ensure atomic validation of compiled production code. Notice that I have never once taken issue with unit testing; in fact, I call it out as the first item in my Quality Assurance Lifecycle chain because that is where it belongs. I am a big proponent of unit testing and believe it should be included, as much as is practicable, in every development project. My issues are with TDD (and its adherents), not with the basic tenets of unit testing, which simply stipulate that every class and method must pass some sort of granular test case before being released to the next phase of the cycle. B-I-G difference.

Getting Back to Basics

Before we can determine whether or not the implementation of a particular process delivers value we must first determine what it is we’re trying to achieve. Andrew and I both argue that the point of all quality assurance methodologies is to produce better code. "Better" is defined, at least by my team, as fewer bugs, fewer failures, fewer exceptions, and fewer iterations through the subsequent QA phases than were encountered during the last cycle; or, in the case of a very mature process, similar metrics which are at least on par with an established baseline. There are, of course, numerous ways to make your code "better". If, for example, you are not doing any unit testing, then simply adopting this methodology will lead to improved results. Likewise, if you currently fail to perform integration or performance testing, doing so will increase the success rate of your code drops. But before you do any of that you must decide what, exactly, you are trying to achieve.
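To make that definition of "better" concrete, here is a minimal Python sketch that compares one cycle's counts against the previous cycle or against an established baseline. The field names (`bugs`, `failures`, `exceptions`, `qa_iterations`) and the rule that every metric must improve are assumptions for illustration, not a prescribed scorecard; the sample numbers are made up.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class CycleMetrics:
    """Counts recorded for one pass through the QA lifecycle (hypothetical fields)."""
    bugs: int
    failures: int
    exceptions: int
    qa_iterations: int  # passes through the later QA phases before sign-off


def _pairs(current, reference):
    # Yield (current value, reference value) for every metric field.
    for f in fields(CycleMetrics):
        yield getattr(current, f.name), getattr(reference, f.name)


def is_better(current, previous):
    """Assumed rule for a maturing process: every count is lower than last cycle."""
    return all(cur < prev for cur, prev in _pairs(current, previous))


def on_par(current, baseline):
    """Assumed rule for a mature process: every count is at or below the baseline."""
    return all(cur <= base for cur, base in _pairs(current, baseline))


last_cycle = CycleMetrics(bugs=40, failures=12, exceptions=30, qa_iterations=5)
this_cycle = CycleMetrics(bugs=31, failures=9, exceptions=22, qa_iterations=4)
print(is_better(this_cycle, last_cycle))  # True
print(on_par(this_cycle, last_cycle))  # True
```

Requiring all metrics to improve is deliberately strict; a team might instead weight the metrics or tolerate small regressions in one of them.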

Let’s revisit the QA lifecycle again for clarity. Any successful software project moves through basically the same set of test phases: Unit, Functional, Integration, Regression, Performance and Usability. Often these phases are combined, especially the middle two (Integration and Regression) and the last two (Performance and Usability), but the first two always stand alone. As a general rule, Unit and Functional testing are mutually exclusive; that is, they are completely separate test methodologies requiring different test cases and different result metrics. It also stands to reason that while Integration testing may be combined with Regression testing, it may never be combined with either Functional or Unit testing.

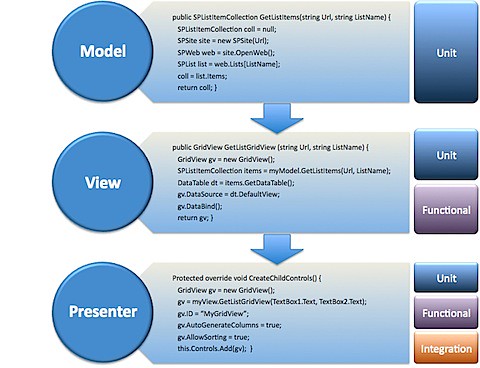
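The phase rules described above can be written down as data, which makes it easy to check a proposed test plan against them. This is a hypothetical Python encoding: `Phase`, `COMBINABLE` and `plan_stages` are illustrative names, and the merge rule simply mirrors the pairings in the text (Integration with Regression, Performance with Usability, Unit and Functional always alone).

```python
from enum import IntEnum


class Phase(IntEnum):
    """QA lifecycle phases in the order code moves through them."""
    UNIT = 1
    FUNCTIONAL = 2
    INTEGRATION = 3
    REGRESSION = 4
    PERFORMANCE = 5
    USABILITY = 6


# The only pairings allowed; Unit and Functional never share a stage.
COMBINABLE = {
    frozenset({Phase.INTEGRATION, Phase.REGRESSION}),
    frozenset({Phase.PERFORMANCE, Phase.USABILITY}),
}


def can_combine(a, b):
    return frozenset({a, b}) in COMBINABLE


def plan_stages(merge=True):
    """Group the phases into stages, merging only the allowed adjacent pairs."""
    stages = []
    for phase in Phase:  # iterates in definition order
        if merge and stages and len(stages[-1]) == 1 and can_combine(stages[-1][0], phase):
            stages[-1].append(phase)
        else:
            stages.append([phase])
    return stages


for stage in plan_stages():
    print(" + ".join(p.name.title() for p in stage))
# Unit
# Functional
# Integration + Regression
# Performance + Usability
```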

The inherent ROI of any single testing phase depends upon where in the underlying pattern the affected code resides. If, for example, a piece of Model layer code is being evaluated, then Unit testing is much more important than it would be for code at the Presentation layer (for any MVC- or MVP-like pattern). This is because the other layers build upon the basic Model code; get it wrong, and the ramifications will ripple throughout the entire project. For Presentation code, by contrast, the greatest impact of a defect occurs further down the chain, often in Functional and Integration testing but sometimes as late as Usability, which lessens the value of lower-level testing. Although we cannot make a blanket statement that the lower a method or class sits in the pattern, the more important it is (some defects, such as failing to dispose of objects or inefficient list iteration, have their greatest impact during Performance testing), we can use it as a general rule when evaluating the ROI of a test phase. In other words, if you’re not doing significant unit testing at the Model level then you are jeopardizing all the subsequent levels AND the overall QA cycle.

If a reduction in defects is our primary goal then the first question we should be asking ourselves isn’t "How much unit testing should we do?" but rather "Where should we unit test?". This focuses our efforts on those elements within our code that have the greatest surface area for defect propagation and leaves out those areas where a defect has little to no impact any further down the chain. Obviously, if we are following an MVC/MVP pattern, this dictates that our unit testing occur primarily at the Model layer, secondarily at the View layer, and finally, if at all, at the Presentation layer.

Measuring Value

Let’s bring this back around to the original argument, which is that Unit testing (in a TDD manifestation) is not worth it when working with SharePoint. If the developer is truly following the guidelines established by the Patterns and Practices group then he or she is already building even simple artifacts such as web parts according to an MVP pattern. If not, a large body of tightly coupled code will make the developer’s task much harder as the work grows more complex; it would be best to first refactor for at least a basic level of abstraction before implementing any type of structured QA process.

For purposes of this discussion, let’s look at a sample web part which displays SharePoint list items within a GridView control:

[NOTE: This is obviously sample pseudo-code; the methods are rough and there is no error handling. In addition, the View layer really should be completely abstracted from the SharePoint OM but I left the hook into SPListItemCollection there for brevity. Your mileage may vary.]
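As a language-neutral illustration of the layering described above (not the original web part code), here is a hypothetical Python sketch with the View layer fully abstracted from the list API, as the note recommends. `ListSource` stands in for the SharePoint object model, and every class and method name is invented; the point is that only the Model layer touches the list source.

```python
from __future__ import annotations

from typing import Iterable, Protocol


class ListSource(Protocol):
    """Hypothetical stand-in for the SharePoint object model (a list's item collection)."""

    def get_items(self, list_name: str) -> Iterable[dict]: ...


class ItemModel:
    """Model layer: the only layer that talks to the list source."""

    def __init__(self, source: ListSource):
        self._source = source

    def items(self, list_name: str) -> list[dict]:
        # Copy only the fields upper layers need, so they never hold list-API objects.
        return [{"Title": i.get("Title", ""), "Modified": i.get("Modified", "")}
                for i in self._source.get_items(list_name)]


class ItemView:
    """Middle (View) layer: shapes Model data into grid rows."""

    def __init__(self, model: ItemModel):
        self._model = model

    def rows(self, list_name: str) -> list[tuple[str, str]]:
        return [(i["Title"], i["Modified"]) for i in self._model.items(list_name)]


class GridPresenter:
    """Presentation layer: binds rows to the grid (plain text stands in for a GridView)."""

    def render(self, rows: list[tuple[str, str]]) -> str:
        return "\n".join(f"{title}\t{modified}" for title, modified in rows)


class InMemorySource:
    """Fake source used only for the usage example below."""

    def __init__(self, data: dict[str, list[dict]]):
        self._data = data

    def get_items(self, list_name: str) -> Iterable[dict]:
        return self._data.get(list_name, [])


source = InMemorySource({"Announcements": [{"Title": "Welcome", "Modified": "2009-07-01"}]})
print(GridPresenter().render(ItemView(ItemModel(source)).rows("Announcements")))
```

Because `ItemView` only ever sees plain dictionaries from `ItemModel`, swapping the real list API for a fake affects the Model layer alone.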

In this sample, the biggest payoff from unit testing occurs at the lowest layer. The most obvious reason is that the Model code is actually interacting with SharePoint, so the fewer defects the better. In addition, memory consumption improvements are best implemented in the base layer, and any potentially catastrophic defects (meaning failures which prevent the page from rendering, etc.) will be isolated from the rest of the code. Finally, and perhaps less obviously, a well-architected Model layer can be reused across a large number of projects. This type of code reuse is one of the primary advantages of pattern-based development; when you measure the cost of creating the initial unit tests against their use across multiple projects, their value multiplies with every project that reuses the code (as do the ramifications of a major defect). Note that no other types of testing are performed this low in the stack; as a general rule, if Model code includes any activities which directly impact functionality or integration then it should be rewritten to achieve a greater degree of abstraction.
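A Model-layer unit test can exercise that code in isolation. In this hypothetical sketch, `FakeListSource` replaces SharePoint with an in-memory dictionary and `TaskModel.open_tasks` is an invented method; the tests are plain pytest-style functions. A fake like this checks the Model's own logic, not how SharePoint itself behaves.

```python
class FakeListSource:
    """Hypothetical in-memory stand-in for the SharePoint list API."""

    def __init__(self, lists):
        self._lists = lists

    def get_items(self, list_name):
        return list(self._lists.get(list_name, []))


class TaskModel:
    """Model layer: reads task items through whatever source it is given."""

    def __init__(self, source):
        self._source = source

    def open_tasks(self, list_name):
        """Titles of items not marked Completed; items with no Title are skipped, not fatal."""
        return [
            item["Title"]
            for item in self._source.get_items(list_name)
            if item.get("Title") and item.get("Status") != "Completed"
        ]


def make_model():
    return TaskModel(FakeListSource({
        "Tasks": [
            {"Title": "Draft spec", "Status": "In Progress"},
            {"Title": "Ship v1", "Status": "Completed"},
            {"Status": "Not Started"},  # malformed item with no Title
        ]
    }))


def test_completed_and_untitled_items_are_excluded():
    assert make_model().open_tasks("Tasks") == ["Draft spec"]


def test_unknown_list_returns_empty_result():
    assert make_model().open_tasks("NoSuchList") == []
```

Running `pytest` against this file discovers and runs both `test_` functions.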

In subsequent layers, Unit testing plays a diminishing role, mostly because the objects being manipulated are well-known core ASP.NET and ADO.NET types (GridViews, DataTables, etc.); however, there are still opportunities to reduce the surface area for potential defects. Of immediate concern are the calls to the Model layer, which should be validated, along with the objects being returned to the Presenter layer. Depending upon the business logic, it may also be necessary to insert some level of Functional testing at this layer if doing so covers a potential defect area (such as calls to external data sources or third-party assemblies, especially those which make updates or change another object’s state).
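At the middle layer the tests shift to the boundaries: did the layer call the Model correctly, and is what it hands upward shaped correctly? A hypothetical sketch using the standard library's `unittest.mock.Mock` as a stand-in Model; the `TaskView` class and its `open_tasks` dependency are invented for illustration.

```python
from unittest.mock import Mock


class TaskView:
    """Middle (View) layer: asks the Model for open tasks and returns grid rows."""

    def __init__(self, model):
        self._model = model

    def grid_rows(self, list_name):
        titles = self._model.open_tasks(list_name)
        # Number rows from 1 so the Presentation layer can bind them directly.
        return [{"Row": n, "Title": title} for n, title in enumerate(titles, start=1)]


def test_view_calls_model_once_with_list_name():
    model = Mock()
    model.open_tasks.return_value = ["Draft spec", "Review spec"]
    TaskView(model).grid_rows("Tasks")
    model.open_tasks.assert_called_once_with("Tasks")


def test_view_returns_numbered_rows():
    model = Mock()
    model.open_tasks.return_value = ["Draft spec", "Review spec"]
    rows = TaskView(model).grid_rows("Tasks")
    assert rows == [{"Row": 1, "Title": "Draft spec"}, {"Row": 2, "Title": "Review spec"}]
```

Because the Model is mocked, these tests say nothing about whether the real Model or SharePoint behaves the same way; that belongs to the Model's own tests and the later QA phases.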

At the Presentation layer Unit testing has almost no ROI whatsoever. Sure, there are situations where validating this or that piece of UI code pays a dividend, but for the most part this is not the case. Test cases at the topmost layers should focus on user interaction through validation of functional specifications, process flow, usability, navigation, etc. At this point testing is almost entirely out of the developer’s hands (with the exception of visual elements which may not render correctly, behave erratically, or otherwise exhibit a relatively minor defect).

So how does all this play into the value equation when developing on SharePoint? The answer by now should be obvious – if you build an abstracted Model layer which effectively isolates communication with the SharePoint framework, you have created a valuable code element which can be reused across multiple projects. To ensure that this element functions as expected – and that future updates and enhancements do not break the functionality of dependent projects – this code should be as testable as possible. Why? Because, as Andrew explains in our PodShow, testable code breeds confidence – confidence in the stability of the code base, confidence in other team members, and confidence in the process or pattern. I would also add that it makes the potential impact of proposed changes measurable (something that is hard to achieve in most development projects) and provides a baseline for risk management.

I believe that the value proposition boils down not to whether you should or shouldn’t test but to how much code you should write to ensure quality output (however that’s measured in your organization). I also believe, and this is where Andrew (along with other TDD adherents) and I begin to diverge, that validation of certain activities is better handled by the code itself than by a fragile edifice of increasingly complex test cases. At some point you need to make a judgement call as to how much validation is enough and whether error handling will suffice instead. You must also take a long, hard look at how much time is being consumed writing test cases for situations that are beyond your control, rarely encountered, or simply too involved to be worth the developer’s time. But when you weigh the value of a shared code base that serves multiple projects and provides testable abstraction against the cost of achieving a level of validation that satisfies your own internal quality standards, I don’t believe there is any question as to whether Unit testing is worth the effort. In fact, I would argue that, due to the complexity of the SharePoint object model, its various undocumented and often erratic behaviors, and the level of skill required to effectively troubleshoot and optimize such code, Unit testing has more value in a SharePoint context than in any other.
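As a small illustration of letting the code validate itself: rather than writing a test case for every malformed value a web part property might hold, the parsing routine below normalizes bad input to a safe value, so a handful of checks covers the fallback paths. `page_size_from_setting`, `DEFAULT_PAGE_SIZE` and `MAX_PAGE_SIZE` are hypothetical names and values chosen for this sketch.

```python
DEFAULT_PAGE_SIZE = 25  # hypothetical default rows per grid page
MAX_PAGE_SIZE = 100  # hypothetical upper bound to keep list queries cheap


def page_size_from_setting(raw):
    """Parse a page-size property; never raises, always returns 1..MAX_PAGE_SIZE."""
    try:
        value = int(raw)
    except (TypeError, ValueError):
        # Missing or non-numeric input: fall back instead of failing the page render.
        return DEFAULT_PAGE_SIZE
    # Clamp out-of-range numbers rather than rejecting them.
    return min(max(value, 1), MAX_PAGE_SIZE)


print(page_size_from_setting("50"))    # 50
print(page_size_from_setting("lots"))  # 25
print(page_size_from_setting(None))    # 25
print(page_size_from_setting("5000"))  # 100
```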

Theory vs. Practice, Redux

As always, this discussion centers as much around theory versus the actual implementation of that theory in practice as anything else. A team can waste countless hours writing thousands of lines of test code and completely obliterate any chance of ever gaining a return on that investment. On the other hand, there is something to be said, especially in an Agile context, for getting code out the door as quickly as possible and dealing with defects only as they arise. Both approaches have merit, and you can generally find a few people willing to argue strongly for or against one or the other, but most of us fall somewhere in the middle.

The key to determining how best to tackle the problem is to first define what you want to achieve and then identify the most efficient and effective way to achieve that objective. Is there a hard cost for implementing a unit testing regime? Absolutely. But being smart about where and how to do it can greatly reduce the overall cost and operational overhead while still satisfying quality standards. Is there a learning curve? There is if you’ve never done it before – potentially a very steep one. The payoff is that once you’ve learned the basic principles they can be applied across nearly every project in a unified manner, much like Six Sigma and ISO 9000 principles have improved manufacturing processes across a wide variety of industries; the code changes but the process remains the same.

One very large pitfall that organizations must be careful to avoid, the discussion of which comprised the main thrust of my original post, is the tendency to overestimate the value of a given methodology. Unit testing can be a very good thing when used properly, but the whole TDD religion can wreak havoc in its overzealousness and self-righteousness. Unit testing alone does not comprise the sum total of quality assurance in software development and its impact shouldn’t be overstated. Test cases are not a substitute for a well-defined and well-documented API. Code should be written to meet functional requirements, not to satisfy test cases. And mock objects can provide a very dangerous level of false security (more on that in a subsequent post). If you only do Unit testing and nothing else you will gain some level of additional quality, but by no means will it be exhaustive; to achieve a full measure of confidence in the final product you must combine it with the other elements in the quality lifecycle and you must make quality the driving force behind every project.

I believe, like Andrew, that there is significant value in creating testable code that provides confidence in the software development process. While we may differ on the theoretical aspects and on various points related to the overall integration of Unit testing into a larger framework such as TDD, I think we can agree (and demonstrate in real-world scenarios) that the investment on the front end pays real dividends on the back end. To claim that SharePoint and TDD are incompatible, or that putting the two together is not worth the time and effort, is both disingenuous and misleading. I would much rather my development team do some kind of testing – any kind of testing – than nothing at all. And if they’re going to do it, they should take a hard look at why they are doing it and formulate some kind of structured process. There’s no shortage of bad code and bad development practices out there; isn’t it time we started talking about how to improve that situation instead of slagging off the entire effort?

“Sahil is nothing if not incendiary, but his basic premise is that the actual amount of code written for the average SharePoint project doesn’t warrant the overhead of a structured development process (he just happened to choose TDD in this instance).”

I never said that. My comment was specifically about TDD. In fact, because TDD is less applicable to SP, you need to rely MORE on other techniques.

Brilliant!

The reason I have been banging on about TDD was not to expose TDD as THE way to Unit Test, it was to argue the case that ALL good development techniques should be considered when doing SharePoint development and statements like ‘You can’t unit test SharePoint’ can be dismissed easily.

I have been an advocate of better software quality my whole career; heck, my first real job was to rewrite the development standards to meet ISO 9001.

I’m totally made up that finally, as a community, we are not only taking these developments seriously but also putting the information out there and championing good development.

Eric Shupps, you are now officially ‘MY HERO’

AndrewWoody

Coming from the unit testing world, and as a proponent of TDD, I need to remind people to always use their head. For thinking, that is. Be pragmatic, and find out what works for you, and if it doesn’t change it. It’s all about improving quality.

Thanks for taking a look at the entire life cycle of development.

When discussing TDD it’s often relevant to just the developer side of things, rather than to the quality of the entire system.

And, I can’t wait for the “why mocking is bad for you” part…

Keep up the good work!

Hi,

First of all thank you for both the parts – that’s a really well-argued insight, lots of food for meditation.

I just have a small thing to report: the sharepoint podshow link is broken – I think it goes like http://www.sharepointpodshow.com/archive/2009/06/29/test-driven-development-part-deux-the-rest-of-the-story-episode-23.aspx.

Regards,

Judith.

“If one is truly interested in producing quality code then one MUST perform some level of Unit testing in order to ensure atomic validation of compiled production code.”

What do you mean by “quality”? Because writing Unit tests after you have written the code will, in almost all cases, make those Unit tests more difficult to write.

TDD, on the other hand, directly leads to better quality code, i.e. more cohesive and less coupled code, because the simplicity of the tests is directly reflected in your code.

I couldn’t agree more – TDD *is* a bunch of nonsense 🙂

Seriously, who said anything about creating unit tests after the fact? Unit tests should be planned for as part of the feature requirements and software architecture; in other words, you should know what needs to be tested BEFORE you start writing code. This allows the developer to plan their classes – and associated tests – in advance in order to ensure maximum efficiency and code coverage.
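A hypothetical sketch of that planning order: an invented feature requirement ("show at most five items, newest first") is turned into test cases first, and the function is then written to meet the requirement itself rather than the bare minimum the tests demand. `latest_items` and the `Modified` field are illustrative names only.

```python
# Hypothetical requirement: show at most `limit` items, newest first.

def latest_items(items, limit=5):
    """Return up to `limit` items sorted by their 'Modified' value, newest first."""
    # ISO-8601 date strings sort correctly as plain strings.
    return sorted(items, key=lambda item: item["Modified"], reverse=True)[:limit]


# Test cases derived from the requirement before the function above was written.
def test_newest_first():
    items = [{"Title": "a", "Modified": "2009-01-01"}, {"Title": "b", "Modified": "2009-03-01"}]
    assert [i["Title"] for i in latest_items(items)] == ["b", "a"]


def test_never_more_than_limit():
    items = [{"Title": str(n), "Modified": f"2009-01-{n:02d}"} for n in range(1, 11)]
    assert len(latest_items(items)) == 5


def test_empty_list_is_allowed():
    assert latest_items([]) == []
```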

In no instance has just doing TDD led to better quality code – it only leads to a perception on the developer’s part that they are producing quality code. Perception and reality are two entirely different things. Quality is measured directly against feature requirements not against specific code elements. In part one of this series I gave a specific example of how TDD can lead to poor quality code and I have seen similar situations occur in project after project where TDD was the focus instead of features.

The core tenet of TDD, “write the minimal amount of code required to make the test pass”, is at fault here; if it were changed to “write the minimal amount of code required to meet the feature requirement”, these problems would not exist. Test cases do not determine code quality – only progression through the entire quality lifecycle can ensure that. Unit tests do not result in good software, but they do provide critical insight into a single atomic level of the QA lifecycle. Their importance should not be overstated nor elevated into an entire theology. Use them where they provide the most payback and make sure they are integrated within the overall process.